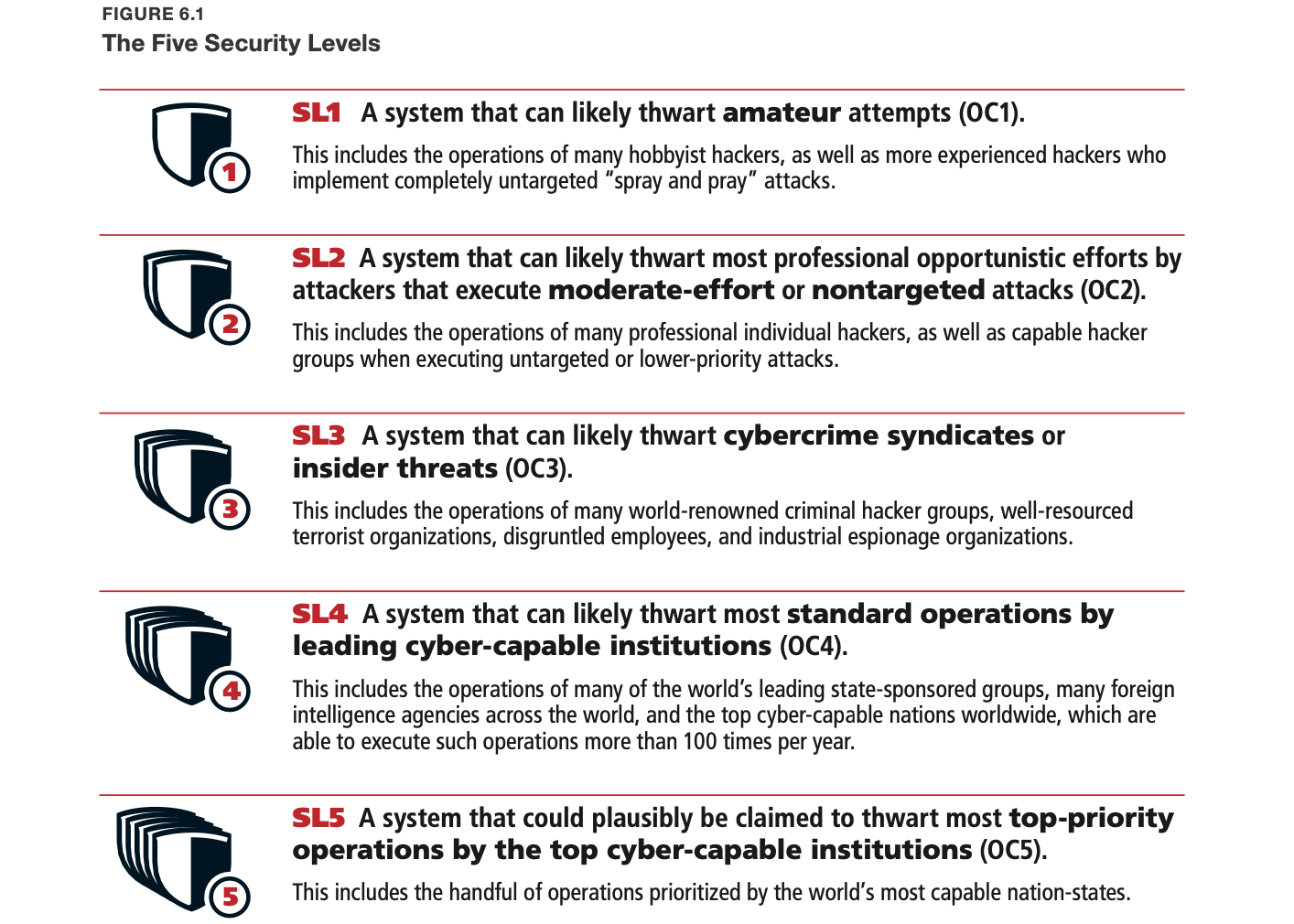

AI companies' security plans, per their published safety policies, involve just SL3-4 security (on RAND's five-level scale) for powerful AI (see Appendix below).[1] (Moreover, companies are likely not to follow their current policies.)

SL3-4 alone is very inadequate: it would add a lot of x-risk relative to perfect security. If AIs that greatly accelerate AI research[2] can be stolen as soon as they exist, then their developer can't prioritize safety much without getting outraced (and the geopolitical situation is worse than if the US had a solid lead). It's much easier to tell a story where things go well if such model weights don't immediately proliferate.

Strong security isn't necessary yet. But you can't just decide to start operating at SL5 once you need it: compressing the process into less than a couple of years is likely prohibitively expensive. Ideally, companies would be working toward SL5 optionality, meaning the ability to turn on SL5 quickly when appropriate. Some kinds of security work will presumably be dramatically boosted by AIs before SL5 is necessary, so for now companies should perhaps focus on work that AI won't accelerate much and on work that unlocks automatable security projects.

SL5 would be very costly, especially because it would slow development.[3] Implementing it unilaterally would make a company less competitive.[4] So companies aren't planning to do so; strong security requires coordination.[5]

To inform the world and potentially enable coordination, companies would ideally say what security would be appropriate if not for competition with other AI developers. Then, when they deviate from that, they would notice, say so, and loudly say that they want strong coordination in which all frontier developers improve their security. (I expect they are currently deviating: I expect they'd say SL5 is appropriate by the time of 10x AI, and they don't seem to be on track for that.)

Appendix

OpenAI

OpenAI's Preparedness Framework defines a "High" security standard and says it will define a "Critical" security standard in the future. The High standard touches on reasonable security themes, but it's vague enough that it would be hard to tell whether a company is meeting it, and it's unclear what real-world security level it entails. OpenAI doesn't say which kinds of attacks or actors the standard is meant to protect against and which are out of scope. On transparency, the standard says "Transparency in Security Practices: Ensure security findings, remediation efforts, and key metrics from internal and independent audits are periodically shared with internal stakeholders and summarized publicly to demonstrate ongoing commitment and accountability." This sounds nice, but it's fuzzy, and OpenAI has ignored similar commitments in the past. The themes sound to me like SL2 or slightly better. The thresholds triggering Critical-level safeguards in offensive cyber[6] and AI R&D[7] sound very high.

Google DeepMind

Google DeepMind's Frontier Safety Framework "recommend[s] a security level" for each of several Critical Capability Levels. The recommendations range from "Security controls and detections at a level generally aligned with RAND SL 2" to "security controls and detections at a level generally aligned with RAND SL 4." ~SL4 is for when humans are basically out of the AI R&D loop; ~SL3 is for systems slightly below that level. The framework gives no details or other reasons to think these security plans are credible, and it has no transparency or accountability mechanisms. DeepMind repeatedly emphasizes that the planned responses are merely recommendations.

Anthropic

Anthropic's Responsible Scaling Policy defines the ASL-3 Security Standard. The standard, which Anthropic says it has implemented, is supposed to "make us highly protected against most attackers' attempts at stealing model weights," but not against "state-sponsored programs that specifically target us (e.g., through novel attack chains or insider compromise) and a small number (~10) of non-state actors with state-level resourcing or backing that are capable of developing novel attack chains that utilize 0-day attacks," nor against insiders who "can request . . . access to systems that process model weights." The insider exception is dubious: it likely covers many insiders, and being vulnerable to many insiders means being vulnerable to many outside actors too, since by default many actors can hack an insider. This exception was added at the last minute, presumably because Anthropic was required to meet the standard but couldn't meet it as originally written.

Anthropic's RSP also says that the ASL-4 security standard "would protect against model-weight theft by state-level adversaries" and will be specified in the future. That sounds great, but without more of a plan, it isn't really credible or any better than "trust us." (The AI R&D capability threshold for triggering this standard is "The ability to cause dramatic acceleration in the rate of effective scaling." Anthropic CEO Dario Amodei says "By 2027, AI developed by frontier labs will likely be smarter than Nobel Prize winners across most fields of science and engineering." Anthropic's security planning is opaque, but like its competitors, it doesn't seem at all on track to "protect against model-weight theft by state-level adversaries" by 2027.)

Note that strong security is costly and current plans are likely to be abandoned; all of the safety policies explicitly allow for this.[8] And companies' plans aren't self-implementing: when a company reaches a capability threshold, it won't necessarily have implemented (or be able to turn on) the corresponding security.

Notes

[1] Exception: Anthropic says it ultimately plans to "protect against model-weight theft by state-level adversaries," but that's dubious, as discussed in the Appendix.

[2] I'm thinking of, say, AIs that are as useful to an AI company as making its employees 10x faster: "10x AIs." (Such AIs would multiply overall AI progress by only ~3x, since compute is more important than labor for AI progress.)

[3] I don't know the details on the cost of optionality, the cost of implementation, what to prioritize now, or how much serial time is necessary; I'm just sharing impressions from conversations with security people.

[4] Except perhaps in terms of becoming the favored company for working with the US government.

[5] We might hope that companies follow their safety policies because they recognize that all the other companies are following their own similar safety policies. But companies' security is opaque (a company can't just choose to demonstrate its security level, even if it wants to), so companies will reasonably worry that their competitors are cutting corners. And regardless, achieving most of the benefits of security requires SL5, which the companies aren't really planning to implement.

[6] A tool-augmented model can identify and develop functional zero-day exploits of all severity levels in many hardened real-world critical systems without human intervention OR model can devise and execute end-to-end novel strategies for cyberattacks against hardened targets given only a high level desired goal.

[7] The model is capable of recursively self improving (i.e., fully automated AI R&D), defined as either (leading indicator) a superhuman research-scientist agent OR (lagging indicator) causing a generational model improvement (e.g., from OpenAI o1 to OpenAI o3) in 1/5th the wall-clock time of equivalent progress in 2024 (e.g., sped up to just 4 weeks) sustainably for several months.

[8] OpenAI says:

We recognize that another frontier AI model developer might develop or release a system with High or Critical capability in one of this Framework’s Tracked Categories and may do so without instituting comparable safeguards to the ones we have committed to. Such an action could significantly increase the baseline risk of severe harm being realized in the world, and limit the degree to which we can reduce risk using our safeguards. If we are able to rigorously confirm that such a scenario has occurred, then we could adjust accordingly the level of safeguards that we require in that capability area, but only if:

we assess that doing so does not meaningfully increase the overall risk of severe harm,

we publicly acknowledge that we are making the adjustment,

and, in order to avoid a race to the bottom on safety, we keep our safeguards at a level more protective than the other AI developer, and share information to validate this claim.

DeepMind says:

These mitigations should be understood as recommendations for the industry collectively: our adoption of them would only result in effective risk mitigation for society if all relevant organizations provide similar levels of protection, and our adoption of the protocols described in this Framework may depend on whether such organizations across the field adopt similar protocols.

Anthropic says:

It is possible at some point in the future that another actor in the frontier AI ecosystem will pass, or be on track to imminently pass, a Capability Threshold without implementing measures equivalent to the Required Safeguards such that their actions pose a serious risk for the world. In such a scenario, because the incremental increase in risk attributable to us would be small, we might decide to lower the Required Safeguards. If we take this measure, however, we will also acknowledge the overall level of risk posed by AI systems (including ours), and will invest significantly in making a case to the U.S. government for taking regulatory action to mitigate such risk to acceptable levels.